Mixing Sweet Beats in Unity 5.0

One of the big areas of focus for Unity 5.0 has been audio. After a quiet period of feature development in this area, we have been working hard to make audio a first-class feature within Unity.

To make this work we first had to take a step back and re-work a lot of the underlying asset pipeline and resource management of audio within Unity. We had to make our codec choices solid and ensure we had a framework that allowed you guys to have a lot of good quality sounds in your game. I’ll try and cover this in detail in a later post, but right now I want to talk about our first big audio feature offering in Unity 5.0, the AudioMixer.

Our First Move

Besides creating a solid foundation for future audio development in Unity, we wanted to give you guys a shiny new feature as our first big ‘we want you to make awesome audio’ offering. Something to show we mean business and want to empower you as best we can on the audio front.

There are a number of areas within the audio system we will be improving over the coming release cycles. Some of them are small and address the little issues that have been outstanding in Unity thus far, things you could consider as fixing the existing feature set. Some of them will be larger, like the ability for users to make amazing interactive sounds, build immersive music systems and control the mix of the soundscape in fine detail.

Why the AudioMixer?

The question of why we chose the AudioMixer as the first big feature to push in Audio 5.0 can be answered pretty simply: previously, true sub-mixing of audio was not possible within Unity. Sounds could be played on an AudioSource, where effects could be added as Components. From there, all the sounds within the game were mixed together at the AudioListener, where effects could be added to the entire soundscape.

We decided to address this with the AudioMixer, and while we were there we thought we would take it to the next level, incorporating many features you would find in established Digital Audio Workstation applications.

Sound Categories

As many sound engineers know, it is super useful to be able to combine collections of sounds into categories and apply volume control and effects over the entire category in one place. Hooking up those volumes and effect parameters to game logic is effectively having a master control over an entire area of the game’s soundscape.

This control over the mix of the entire soundscape is super important! It’s a fantastic way of controlling the mood and immersion of the audio mix. A good mix and music track can take players through the full range of emotions during gameplay, and create atmospheres that are not possible with graphic flair alone.

Mixing In Unity

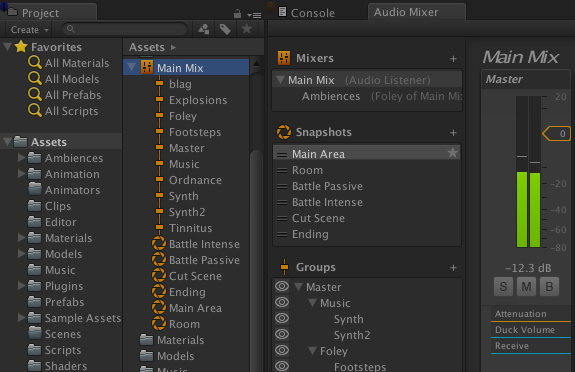

This is the purpose of the AudioMixer. It’s an asset that users can incorporate into their scenes to control the overall mix of all the sounds in the game. All the sounds playing in a scene can be routed into one or more AudioMixers, which will categorise them and apply all sorts of modifications and effects to the mix of those sounds.

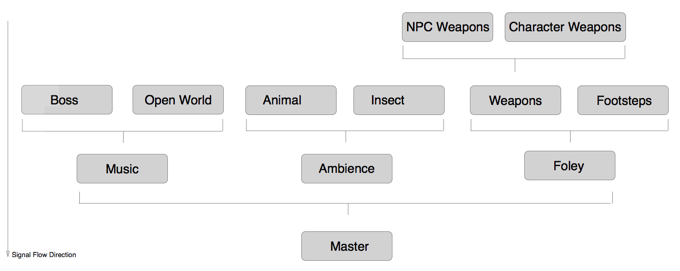

Each AudioMixer can have a hierarchy of categories defined, which in the case of the AudioMixer are called AudioGroups. You can also view a lineup of these AudioGroups in a traditional mixing desk layout, which many from the music and film industries will be used to.

DSP

The AudioMixer is, of course, more than just setting up mixing hierarchies. As one would expect, each AudioGroup can contain a bunch of different DSP audio effects that are applied sequentially as the signal passes through the AudioGroup.

Now we are getting somewhere! Not only can you now create custom routing schemes and mixing hierarchies, but you can put all sorts of DSP goodies anywhere in the signal chain, allowing all sorts of effect options over your soundscapes. You can even add a dry path around an effect so that only a portion of the signal is processed by it.
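To make the idea of sequential effects with a dry path concrete, here is a minimal sketch in plain Python. It assumes the dry path is a simple linear crossfade between the unprocessed and processed signal, which is an assumption for illustration and not a description of how Unity mixes wet and dry internally. The function names (`apply_with_dry_path`, `run_chain`) and the two toy effects are hypothetical.

```python
from typing import Callable, List, Tuple

Effect = Callable[[List[float]], List[float]]


def apply_with_dry_path(samples: List[float], effect: Effect, wet_mix: float) -> List[float]:
    """Blend the processed (wet) signal with the untouched (dry) signal.

    wet_mix = 1.0 means fully processed, 0.0 means the effect is bypassed.
    Assumption: a plain linear crossfade.
    """
    wet = effect(samples)
    return [(1.0 - wet_mix) * d + wet_mix * w for d, w in zip(samples, wet)]


def run_chain(samples: List[float], chain: List[Tuple[Effect, float]]) -> List[float]:
    """Apply each (effect, wet_mix) pair in order, like inserts in a group."""
    out = list(samples)
    for effect, wet_mix in chain:
        out = apply_with_dry_path(out, effect, wet_mix)
    return out


# Two toy "effects" standing in for real DSP.
def halve(samples: List[float]) -> List[float]:
    return [0.5 * s for s in samples]


def invert(samples: List[float]) -> List[float]:
    return [-s for s in samples]


# halve is fully wet; invert only processes a quarter of the signal.
result = run_chain([1.0, -0.5], [(halve, 1.0), (invert, 0.25)])
print(result)  # [0.25, -0.125]
```

Reordering the chain or changing a `wet_mix` value changes the output, which is the flexibility the effect stack is meant to give you.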

But what if you want more DSP control than just the inbuilt effects of Unity? Previously this was handled exclusively with the OnAudioFilterRead script callback, which allowed you to process audio samples directly in your scripts.

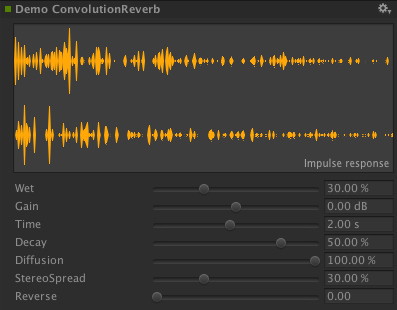

This is great for lightweight effects or prototyping your fancy filter ideas. Sometimes, though, you want the ability to write native compiled effects for the best performance, so you can build more heavyweight ideas like a custom convolution reverb or multi-band EQ.
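For readers who have not used a sample-processing callback before, the general shape can be sketched outside Unity. The snippet below is plain Python, not Unity script code: it assumes the callback receives one flat buffer of interleaved samples plus a channel count and modifies the buffer in place. The function name and the `gains` parameter are hypothetical.

```python
from typing import List


def on_audio_filter_read(data: List[float], channels: int, gains: List[float]) -> None:
    """Process an interleaved buffer in place.

    For stereo, data is laid out as [L0, R0, L1, R1, ...], so the channel
    of sample i is i % channels. Here each channel gets its own gain.
    """
    for i in range(len(data)):
        data[i] *= gains[i % channels]


# Two stereo frames: halve the left channel, mute the right channel.
buffer = [1.0, 1.0, 0.5, 0.5]
on_audio_filter_read(buffer, channels=2, gains=[0.5, 0.0])
print(buffer)  # [0.5, 0.0, 0.25, 0.0]
```

A loop like this runs for every audio buffer, which is why heavier processing tends to be better suited to native code.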

Unity now also supports custom DSP plugin effects, with users having the ability to write their own native DSP for their game, or perhaps distribute their amazing effect ideas on the Asset Store for others to use. This opens up a whole world of possibilities, from writing your own synth engine to interfacing with other audio applications like Pure Data. These custom DSP plugins can also request sidechain support and will be supplied sidechain data from anywhere else in the mix! Hawtness!

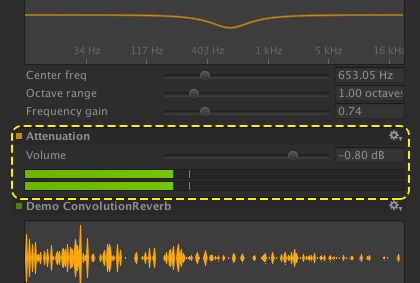

One of the cool things possible with the effect stack in an AudioGroup is that you can apply the group’s attenuation anywhere in the stack. You can even boost the signal now, as we allow volume levels up to +20dB. The inspector even has an integrated VU meter to show you exactly what is happening with the signal at the point of attenuation.
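As a rough illustration of what a decibel value means for the signal, and why the position of the attenuation in the stack matters, here is a small Python sketch. It uses the standard amplitude conversion (gain = 10^(dB/20)), so +20dB corresponds to a tenfold boost. The hard clipper is a hypothetical stand-in for any non-linear effect and is not one of Unity’s inbuilt effects.

```python
import math


def db_to_linear(db: float) -> float:
    """Convert a level in decibels to a linear amplitude multiplier."""
    return 10.0 ** (db / 20.0)


def linear_to_db(gain: float) -> float:
    """Convert a positive linear amplitude multiplier to decibels."""
    return 20.0 * math.log10(gain)


def hard_clip(sample: float) -> float:
    """Toy non-linear effect: clamp the sample to the range [-1, 1]."""
    return max(-1.0, min(1.0, sample))


boost = db_to_linear(20.0)  # +20 dB is a 10x amplitude boost
sample = 0.5

# Attenuation point placed before the clipper: the boosted signal gets clipped.
attenuate_then_clip = hard_clip(sample * boost)

# Attenuation point placed after the clipper: the clipper sees the quiet
# signal, and the boost is applied to its output.
clip_then_attenuate = hard_clip(sample) * boost

print(attenuate_then_clip, clip_then_attenuate)  # 1.0 5.0
```

With purely linear effects the order would not matter, but as soon as something non-linear is in the stack, where you place the attenuation changes the result.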

When combined with non-linear DSP, Sends / Receives and our new Ducking insert (which will be explained later in this post), it becomes a super powerful way of controlling the flow of audio signal through a mix.

Mood Transitions

I talked earlier about controlling the mood of the game with the mix of the soundscape. This can be achieved by bringing new stems of music or ambient sounds in and out. Another common way to achieve this is to transition the state of the mix itself. Changing the volume of sections of the mix and transitioning to different parameter states of effects is an effective way of taking the mood of a player where you want it to go.

Inside all AudioMixers is the ability to define snapshots. Snapshots capture the state of all of the parameters in the AudioMixer. Everything from effect wet levels to AudioGroup pitch levels can be captured and transitioned between.

You can even create complex blend states between a whole bunch of Snapshots within your game, creating all sorts of possibilities and uses.
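Conceptually, a snapshot can be thought of as a named set of parameter values, and a blend as a weighted mix of several such sets. The Python sketch below shows that idea under stated assumptions: parameters are blended linearly with normalised weights, and transitions are a straight linear interpolation over time. The parameter names and values are made up for illustration, and this is not a description of Unity’s internal interpolation.

```python
from typing import Dict, List

Snapshot = Dict[str, float]


def blend_snapshots(snapshots: List[Snapshot], weights: List[float]) -> Snapshot:
    """Weighted average of every parameter across the given snapshots."""
    total = sum(weights)
    if total <= 0.0:
        raise ValueError("weights must sum to a positive value")
    return {
        name: sum(w * snap[name] for snap, w in zip(snapshots, weights)) / total
        for name in snapshots[0]
    }


def transition(start: Snapshot, target: Snapshot, t: float) -> Snapshot:
    """Linear interpolation from start to target; t is clamped to [0, 1]."""
    t = min(max(t, 0.0), 1.0)
    return {name: start[name] + (target[name] - start[name]) * t for name in start}


# Hypothetical parameter names and values.
field = {"music_volume_db": 0.0, "reverb_wet_db": -40.0}
cave = {"music_volume_db": -10.0, "reverb_wet_db": 0.0}

halfway = transition(field, cave, 0.5)
mostly_cave = blend_snapshots([field, cave], [1.0, 3.0])

print(halfway)      # {'music_volume_db': -5.0, 'reverb_wet_db': -20.0}
print(mostly_cave)  # {'music_volume_db': -7.5, 'reverb_wet_db': -10.0}
```

In the editor you get this behaviour by defining the snapshots and choosing which one to move to, rather than by writing the interpolation yourself.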

Imagine walking from an open field section of your map into a sinister cave and having the mix transition to highlight more subtle ambiences, bring in different instruments of your music ensemble and change the reverb characteristics of the foley. Imagine setting this up without having to write a line of script code.

Divergent Signal

But the power of Snapshots really grows when they are combined with Sends, Receives and Ducking.

Sends

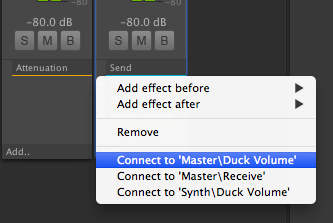

Aside from traditional insert DSP effects available in Unity, you can also insert a “Send” anywhere into the mix. A Send effectively branches the audio signal wherever the Send is inserted, and within Sends you can choose how much of the signal you wish to branch off.

Things are now becoming even more interesting! Given that the level of signal you branch is part of the snapshot system, you can start to see how you can incorporate signal flow changes with snapshot transitions. From here the potential setup possibilities start snowballing.

But where does this branched signal go? Currently there are two options in Unity for a Send to target: Receives and Volume Ducking.

Receives

Receives are fairly straightforward processing units. They are inserts just like any other effect and they simply take all the branched audio from all the Sends that target them and mix it together, passing it off to the next effect in the AudioGroup.

Receives can of course be placed anywhere among the effects and the attenuation point of an AudioGroup, which gives huge flexibility over when the branched signal is introduced to the mix.
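The Send and Receive pairing can be sketched in a few lines of Python. This is only an illustration of the signal flow: it assumes a Send branches off a scaled copy of the signal while the original carries on unchanged, and that a Receive sums every branch targeting it into whatever signal is already passing through its AudioGroup. The function names and sample values are hypothetical.

```python
from typing import List


def send(samples: List[float], send_level: float) -> List[float]:
    """Branch off a scaled copy of the signal; the input is left untouched."""
    return [send_level * s for s in samples]


def receive(incoming: List[float], branches: List[List[float]]) -> List[float]:
    """Sum all branched signals into the signal already in this group."""
    out = list(incoming)
    for branch in branches:
        for i, s in enumerate(branch):
            out[i] += s
    return out


footsteps = [0.5, 0.25]
gunfire = [0.25, -0.5]

# Both groups send to a shared reverb group with different send levels.
to_reverb = [send(footsteps, 0.5), send(gunfire, 1.0)]
reverb_input = receive([0.0, 0.0], to_reverb)

print(reverb_input)  # [0.5, -0.375]
```

Because the send level is just another parameter, changing it between snapshots changes how much of each group reaches the shared effect.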

Volume Ducking

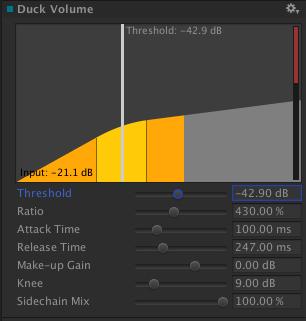

Sends can also target Volume Ducking insert units. Much like Receives, these units can be placed anywhere in the mix alongside your other DSP effects.

When a Send is targeting a Volume Ducking insert, it acts much like a side chain compressor setup, meaning you can side chain from anywhere in the mix and apply volume ducking anywhere else from it!
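For the curious, the core of sidechain ducking can be sketched as follows. This Python snippet assumes a simple threshold and ratio model driven by the RMS level of one block of sidechain audio, with no attack or release smoothing. The function names and default values are hypothetical, and it is not a description of how Unity’s Volume Ducking unit is implemented.

```python
import math
from typing import List


def rms_db(samples: List[float]) -> float:
    """RMS level of a block in decibels (negative infinity for silence)."""
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0.0:
        return -math.inf
    return 10.0 * math.log10(mean_square)


def duck_gain_db(sidechain_db: float, threshold_db: float, ratio: float) -> float:
    """Gain change in dB: zero below the threshold, increasingly negative above it."""
    over = sidechain_db - threshold_db
    if over <= 0.0:
        return 0.0
    return -over * (1.0 - 1.0 / ratio)


def duck(target: List[float], sidechain: List[float],
         threshold_db: float = -20.0, ratio: float = 4.0) -> List[float]:
    """Lower the target block according to how loud the sidechain block is."""
    gain_db = duck_gain_db(rms_db(sidechain), threshold_db, ratio)
    gain = 10.0 ** (gain_db / 20.0)
    return [gain * s for s in target]


explosions = [0.5, -0.5]
silence = [0.0, 0.0]
dialog = [1.0, -1.0]  # full-scale block, 0 dB RMS

untouched = duck(explosions, silence)  # nobody is talking: no ducking
ducked = duck(explosions, dialog)      # dialog is loud: explosions drop by 15 dB
```

A real ducker would smooth the gain over time so the change is not audible as a sudden jump, which is what attack and release controls are for.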

What does this mean for the layman? Imagine you are mixing your FPS game and you want to avalanche the player with the sound of gunfire and explosions. Fair enough, but what about when you walk up to an NPC in the field of battle and you need to hear the sagely words they utter? Volume Ducking allows you to dynamically lower the volume of entire sections of the mix (in this case, all the ordnance sounds) based on other sections of the mix (the NPC talking). You simply have to Send from the AudioGroup containing all the NPC dialog to a Volume Ducking unit on the Ordnance AudioGroup.

You could even apply side chain compression to your musical setup dynamically, having the rest of your instruments compressed off the bass track.

The best thing is you can set this all up in the editor without a line of code!

Parting Words

Even though I have really only scratched the surface of the possibilities the AudioMixer provides in this post, I hope it’s enough to spark people’s interest in the possibilities of audio in Unity 5.0.

With Unity 5.0 and beyond, we really want to push the future of audio in games, giving you the suite of tools you need to make awesome sounding stuff!

Let us know your thoughts!

The Audio Team

PS: Wayne will talk about New Audio Radness in Unity 5.0 at Unite 2014, August 22, 13:30-14:30. See you there!

Bonus Video: Beat mixing in Unity!
